Dataset

Our dataset includes more than 40,000 frames with semantic segmentation labels for both camera images and point clouds, of which more than 12,000 frames also have 3D bounding box annotations. In addition, we provide approximately 390,000 frames of unlabelled sensor data for sequences with several loops, recorded in three cities.

Semantic segmentation

The dataset features 41,280 frames with semantic segmentation labels in 38 categories. Each pixel in an image is assigned a label describing the type of object it belongs to, such as pedestrian, car or vegetation.

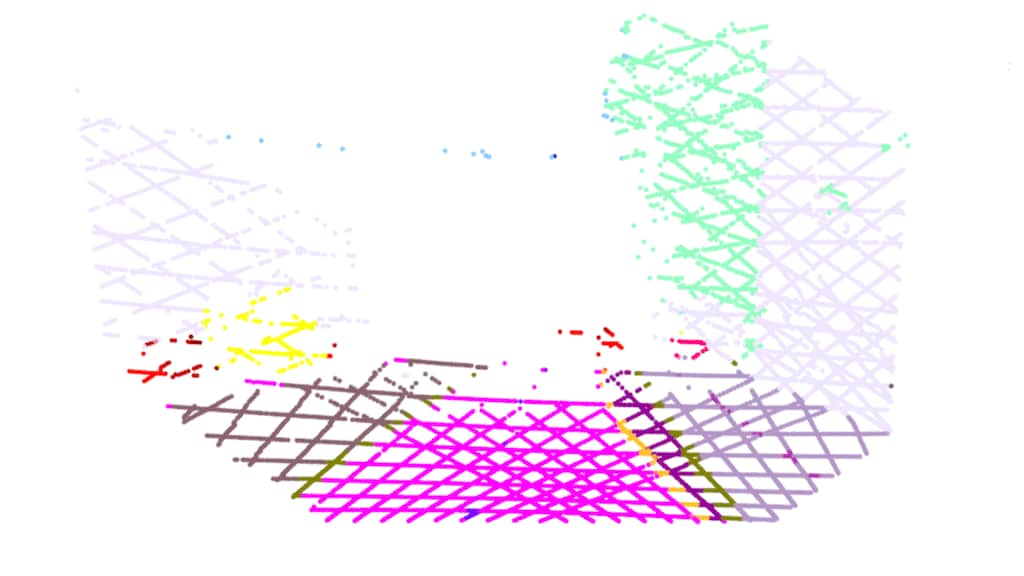
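Per-pixel labels of this kind are often stored as color-coded images, where each RGB color stands for one category. The sketch below converts such an image into a map of integer class IDs. It assumes a color-coded storage format and uses a hypothetical color-to-class table; the actual mapping for the 38 categories would have to be read from the dataset's own metadata.

```python
import numpy as np

UNKNOWN = 255  # ID assigned to pixels whose color is not in the table

def rgb_to_class_ids(label_rgb: np.ndarray, color_to_class: dict) -> np.ndarray:
    """Convert an (H, W, 3) uint8 color-coded label image into an (H, W) class-ID map.

    `color_to_class` maps (R, G, B) tuples to integer class IDs. The table passed
    in is an assumption: the real color mapping must come from the dataset metadata.
    """
    if label_rgb.ndim != 3 or label_rgb.shape[2] != 3:
        raise ValueError("expected an (H, W, 3) array")
    # Pack each RGB triple into one 24-bit integer so colors compare in a single step.
    rgb = label_rgb.astype(np.uint32)
    packed = (rgb[..., 0] << 16) | (rgb[..., 1] << 8) | rgb[..., 2]
    ids = np.full(packed.shape, UNKNOWN, dtype=np.uint8)
    for (r, g, b), class_id in color_to_class.items():
        ids[packed == ((r << 16) | (g << 8) | b)] = class_id
    return ids

# Hypothetical three-entry table, for illustration only.
EXAMPLE_TABLE = {(255, 0, 0): 0, (0, 255, 0): 1, (0, 0, 255): 2}
```

Packing colors into integers keeps the lookup to one comparison per table entry, which stays cheap even with all 38 categories.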

Point cloud segmentation

Point cloud segmentation is produced by fusing the semantic pixel labels with the LiDAR point clouds, so that each 3D point is assigned an object type label. The quality of these labels therefore depends on accurate camera-LiDAR registration.

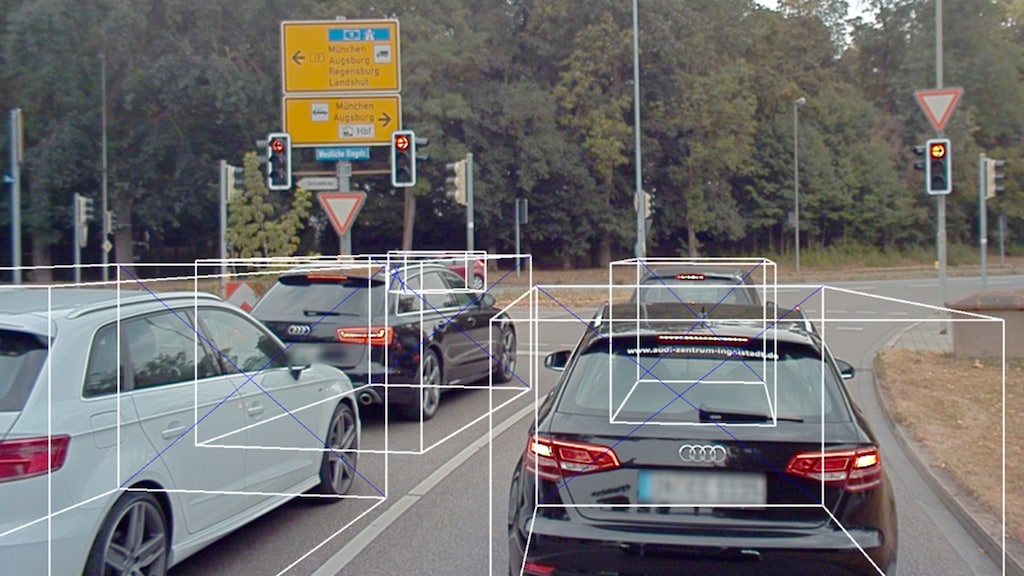
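One common way to fuse pixel labels with a point cloud is to project each LiDAR point into the camera image and copy the label of the pixel it lands on. The following is a minimal sketch of that technique, not the dataset's own pipeline. It assumes an undistorted pinhole camera with intrinsic matrix `K`, a 4x4 extrinsic transform `T_cam_from_lidar` (this is where the camera-LiDAR registration enters), and a camera frame with z pointing forward; all names are hypothetical.

```python
import numpy as np

def label_points(points_lidar, T_cam_from_lidar, K, label_map, unknown=255):
    """Assign each LiDAR point the class ID of the pixel it projects onto.

    points_lidar:      (N, 3) points in the LiDAR frame.
    T_cam_from_lidar:  (4, 4) rigid transform from LiDAR to camera frame (assumed).
    K:                 (3, 3) pinhole intrinsics, no lens distortion (assumed).
    label_map:         (H, W) integer class-ID image.
    Points behind the camera or outside the image receive `unknown`.
    """
    n = points_lidar.shape[0]
    # Homogeneous coordinates, then transform into the camera frame.
    homo = np.hstack([points_lidar, np.ones((n, 1))])
    pts_cam = (T_cam_from_lidar @ homo.T).T[:, :3]
    labels = np.full(n, unknown, dtype=np.uint8)
    in_front = pts_cam[:, 2] > 0
    # Pinhole projection: [u*z, v*z, z]^T = K @ [x, y, z]^T.
    proj = (K @ pts_cam[in_front].T).T
    # Pixel (u, v) covers [u, u+1) x [v, v+1), so take the floor.
    u = np.floor(proj[:, 0] / proj[:, 2]).astype(int)
    v = np.floor(proj[:, 1] / proj[:, 2]).astype(int)
    h, w = label_map.shape
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx = np.flatnonzero(in_front)[inside]
    labels[idx] = label_map[v[inside], u[inside]]
    return labels
```

Any error in `T_cam_from_lidar` shifts every projected point, which is why the label quality hinges on the registration: near object boundaries, a small misalignment assigns points the label of a neighbouring object.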

3D bounding boxes

3D bounding boxes are provided for 12,499 frames. LiDAR points within the field of view of the front camera are labelled with 3D bounding boxes. We annotate 14 classes relevant to driving, such as cars, pedestrians and buses.
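To relate box annotations back to the point cloud, a typical step is to test which LiDAR points fall inside a given box. The sketch below assumes a hypothetical box parameterization (center, length/width/height, and a yaw angle about the vertical z-axis); the dataset's actual box format may differ and would need to be converted first.

```python
import numpy as np

def points_in_box(points, center, size, yaw):
    """Boolean mask of points inside an upright 3D box rotated by `yaw` about z.

    points: (N, 3) array; center: (3,) box center; size: (length, width, height).
    The (center, size, yaw) parameterization is an assumption for illustration.
    """
    # Translate into the box-centered frame, then undo the yaw rotation,
    # i.e. apply R(-yaw) so the box axes line up with x, y, z.
    local = points - np.asarray(center, dtype=float)
    c, s = np.cos(yaw), np.sin(yaw)
    x = c * local[:, 0] + s * local[:, 1]
    y = -s * local[:, 0] + c * local[:, 1]
    z = local[:, 2]
    half = np.asarray(size, dtype=float) / 2.0
    return (np.abs(x) <= half[0]) & (np.abs(y) <= half[1]) & (np.abs(z) <= half[2])
```

Rotating the points into the box frame, rather than rotating the box, reduces the test to three interval checks per point.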